Low & No Code Platforms in an enterprise scenario. A practical guide on how to ensure business value & compliance with IT processes and standards

Andreas Kurz, Digital Office Head of Business Intelligence, Alfagomma Group

Introduction

In today’s rapidly evolving business landscape, it is crucial for organizations to respond to changes by providing digital, system-supported solutions for emerging business requirements. This puts tremendous pressure on the CIO and the IT organisation, since traditionally they are the ones expected to provide those solutions.

The truth, though, is that in most non-tech companies the IT department does not have the capacity and/or capabilities to implement those requirements. Implementing (custom) software with external partners can be expensive and time-consuming, often hindering a company’s ability to adapt quickly.

As a response, business units are increasingly turning to low code platforms. Low code platforms provide a simplified development environment that enables non-technical business users to build applications with minimal, simplified coding or by just dragging and dropping prebuilt components into a single process. Popular low code platforms include Microsoft PowerApps and UiPath software robots. The benefits of those platforms are very appealing to the business:

• The "development environment" and language are comparable to the Microsoft Office suite. Business users immediately feel at home, and it takes very little time to get the first apps up and running.

• Built-in functionalities like screen recording and replay are similar to the good old macro recording in Excel. The only difference is that record & replay is not limited to Excel but covers any kind of user interaction on the screen.

Problem Statement:

With those offerings it is obvious why business users love low code platforms. Firstly, they enable the business to rapidly develop and deploy applications, ensuring a high level of agility in response to ever-changing market conditions and customer needs. Secondly, by simplifying the development process, low code platforms empower not only developers but also non-technical users, such as business analysts and subject matter experts, to create tailored solutions that address specific business requirements. Last but not least, the democratization of development fosters a culture of innovation. It encourages the business to experiment with new solutions and explore disruptive new processes quickly at no or low cost.

Sounds too good to be true? Unfortunately, yes. The benefits of low code platforms are often outweighed by the technical debt they introduce to the organization:

While low code platforms offer simplified prebuilt components, they do not provide the same level of customization and flexibility as traditional programming. Complex business requirements or company-specific use cases usually necessitate inefficient workarounds or the use of additional components. What could have been one line of code suddenly becomes a whole workflow. This decreases the maintainability and stability of the solution.

Low code platforms must often interact with existing IT systems and infrastructure. Integration can be complex, particularly if the low code platform lacks robust support for APIs, data formats, or communication protocols required by legacy systems. Business users usually opt for GUI-based integration scenarios. This inevitably results in major problems, since interaction with the GUI is not predictable and changes to the GUI or the process will almost certainly break the application.

In addition to that, ensuring governance and information security is challenging. Low code platforms can introduce new risks, as non-technical users might inadvertently create vulnerabilities or overlook compliance requirements. The best examples of this are the use of personal credentials for system logins and the unhashed storage of passwords.
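To make the point about unhashed password storage concrete, the following is a minimal sketch using only the Python standard library. The function names and the iteration count are illustrative assumptions, not part of any low code platform or of the solution described in this article. The idea is simply that a salted, derived key is stored instead of the password itself.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

# Assumed, illustrative parameters; follow current security guidance in practice.
ITERATIONS = 600_000
SALT_BYTES = 16


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, derived_key); only these two values are stored."""
    if salt is None:
        salt = os.urandom(SALT_BYTES)
    key = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, key


def verify_password(password: str, salt: bytes, expected_key: bytes) -> bool:
    """Re-derive the key from the candidate password and compare in constant time."""
    _, key = hash_password(password, salt)
    return hmac.compare_digest(key, expected_key)
```

Note that hashing only fits cases where a password has to be verified. A robot that must log into another system needs the actual secret, which belongs in a managed secret store rather than in the workflow itself.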

Low code platforms often lack robust version control and integration with version control systems. This makes it incredibly difficult to track changes, manage parallel development efforts, and ensure that modifications do not introduce unintended consequences. The absence of version control complicates testing and the deployment process and increases the potential for errors and delays.

As a result, low code platforms tend to lead to the development of monolithic applications, in which various components are tightly coupled together. The developed systems are non-modular and hard to maintain or update. When a change occurs in the overall scenario or a specific component, the low code solution breaks or requires extensive rework. This ultimately hinders the agility and adaptability of the system, undermining one of the key benefits of low code platforms.

The list of concerns is not meant to be complete. However, those were the most critical issues we faced when introducing low code solutions to the organization.

Does this ultimately mean that organisations need to keep their hands off low code platforms? Certainly not. But they clearly need to understand the benefits and risks associated with low code platforms and introduce them to the business and its processes on a best-fit basis.

Solution:

Having seen and implemented multiple low code solutions, we developed a guideline for the usage of low code platforms. There are two scenarios for which the usage and implementation of low code platforms is recommended: Rapid Prototyping and Micro-service based Engineering.

Rapid Prototyping is applied when a business problem requires a technical solution and the budget for that solution is available. In this scenario, a process or application that solves the business problem is developed quick-and-dirty, with the clear goal that it will not be used in any production scenario. This way, we leapfrog the requirements engineering phase and directly involve the business user in the development process. The benefit of this approach is a very tangible definition of business requirements, which usually cannot be achieved via a regular requirements engineering document. In written requirements, business users usually do not consider edge cases or define the requirements in enough detail for developers to implement. With rapid prototyping, the business user is confronted with those edge cases early in the process and can provide the solution. In addition, creativity is sparked: very creative and operational ideas on how to solve the business requirement in the final product emerge. For the actual developer, this approach also has tremendous benefits, since the business requirements are tangible and pretested, which ultimately leads to a shorter time to market, less ambiguity about what is needed and a lower cost of the custom implementation.

In contrast, the micro-service based implementation does consider the productive deployment of the application or processes built with the low code platform. However, this approach aims to reduce the number of business processes built on the low code platform to the greatest extent possible, and it requires the developer to modularise the solution into individual components, each of which fulfils exactly one use case. The advantage of this approach is that, by design, the usually monolithic infrastructure is broken up into individual services. Additionally, this reduces the business processes implemented on the low code platform to those requirements that are most valuable to the business. All other components of the process are integrated into existing or custom-made microservices which are built and maintained by the IT organization.

The remainder of this article focuses on the implementation of the micro-service based approach, using the example of integrating UiPath Robotic Process Automation (RPA) with serverless Function Apps on MS Azure.

Practical Implementation:

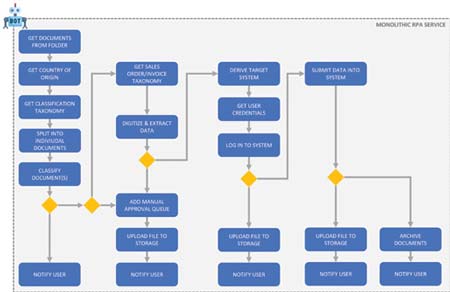

A very popular use case for the adoption of RPA is the automated processing of documents for accounts receivable (AR) and accounts payable (AP). In those use cases, a software robot takes the place of a human operator. The robot picks up customer sales orders and supplier invoices, digitizes those documents into machine-readable formats using Optical Character Recognition (OCR) engines, splits them into individual document types and classifies them. It then uses Artificial Intelligence (AI) models to extract the relevant information based on the classification (e.g. Document Type Salesorder = Extract Salesorder number, Document Type Invoice = Extract Invoice number). Finally, it takes all extracted data, logs into the ERP system using user or non-personal credentials and submits the corresponding document to the ERP on behalf of the user.
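The "classify first, then extract what that document type needs" step can be illustrated with a small, deliberately naive sketch. Everything in it is an assumption made for illustration: the keyword rules, the regular expressions and the function names. A real setup would rely on an OCR engine and trained models rather than on keyword matching.

```python
import re
from typing import Callable, Dict, Optional


def _find(pattern: str, text: str) -> Optional[str]:
    """Return the first captured group of a case-insensitive match, or None."""
    match = re.search(pattern, text, re.IGNORECASE)
    return match.group(1) if match else None


def classify(text: str) -> str:
    """Toy classifier based on keywords in the digitized text."""
    lowered = text.lower()
    if "invoice" in lowered:
        return "Invoice"
    if "sales order" in lowered:
        return "Salesorder"
    return "Unknown"


def extract_sales_order(text: str) -> Dict[str, Optional[str]]:
    return {"sales_order_number": _find(r"sales order no[.:]?\s*(\S+)", text)}


def extract_invoice(text: str) -> Dict[str, Optional[str]]:
    return {"invoice_number": _find(r"invoice no[.:]?\s*(\S+)", text)}


# One extractor per document type, selected by the classification result.
EXTRACTORS: Dict[str, Callable[[str], Dict[str, Optional[str]]]] = {
    "Salesorder": extract_sales_order,
    "Invoice": extract_invoice,
}


def process(text: str) -> Dict[str, Optional[str]]:
    """Classify a document and run the extractor registered for its type."""
    document_type = classify(text)
    extractor = EXTRACTORS.get(document_type)
    if extractor is None:
        return {"document_type": document_type}
    return {"document_type": document_type, **extractor(text)}
```

Keeping the extractors in a lookup table means a new document type only adds one entry and one function, without touching the rest of the flow.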

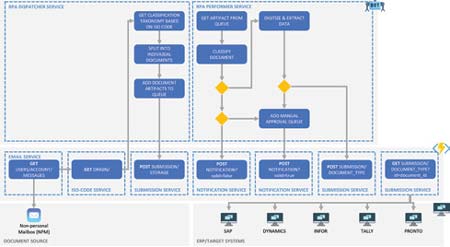

Below is a high level architecture of the AR/AP automation scenario using UiPath RPA.

AR/AP is a very prominent use case for RPA since the process is highly standardised and manual. Imagine a customer sales order containing 100 line items or individual order items. The manual processing of such a document can take between 10 and 30 minutes for a seasoned operator. Some operations receive hundreds of sales orders daily. Since RPA runs 24/7, it is at the top of the list of digitization activities of any CFO and CEO.

Independent of the significant cost saving potential, the solution is highly complex when implemented in a fully automated, enterprise-grade scenario:
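A quick back-of-the-envelope calculation shows the scale. Assuming 100 documents per day (our assumption, taken as the lower end of "hundreds"), 10 to 30 minutes per document adds up to 1,000 to 3,000 minutes, or roughly 17 to 50 hours of manual work every day. The helper below is only a sketch for replaying this with other volumes.

```python
def daily_manual_effort_hours(documents_per_day: int, minutes_per_document: float) -> float:
    """Total manual processing time per day, in hours."""
    return documents_per_day * minutes_per_document / 60


# Assumption: 100 documents per day, the lower end of "hundreds".
DOCUMENTS_PER_DAY = 100

if __name__ == "__main__":
    for minutes in (10, 30):
        hours = daily_manual_effort_hours(DOCUMENTS_PER_DAY, minutes)
        print(f"{minutes} min per document: {hours:.1f} hours per day")
```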

• Ensuring error handling

• Ensuring logging and monitoring of the solution

• Secure login into Systems (e.g. Non Personal Mailbox, ERP System).

• Considering edge cases and exception management.
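To give an idea of what error handling, logging and exception management mean in code, here is a minimal sketch of a retry wrapper. The names `run_step` and `StepFailed` are hypothetical and not part of UiPath or Azure. Each attempt is logged, and a step that keeps failing is escalated as an exception that a separate exception management process could pick up.

```python
import logging
import time
from typing import Callable, TypeVar

T = TypeVar("T")
logger = logging.getLogger("rpa.step")


class StepFailed(Exception):
    """Raised when a step still fails after all attempts."""


def run_step(name: str, action: Callable[[], T], retries: int = 3,
             delay_seconds: float = 0.0) -> T:
    """Run one process step, log every attempt and retry on errors."""
    for attempt in range(1, retries + 1):
        try:
            result = action()
            logger.info("step=%s attempt=%d status=ok", name, attempt)
            return result
        except Exception as exc:  # broad on purpose: every failure is logged
            logger.warning("step=%s attempt=%d status=error detail=%s",
                           name, attempt, exc)
            if attempt < retries:
                time.sleep(delay_seconds)
    raise StepFailed(f"{name} failed after {retries} attempts")
```

A call such as `run_step("erp_login", login_to_erp)` would wrap a single step, where `login_to_erp` stands for any function supplied by the caller.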

The proof of concept of the process can be achieved within several weeks without prior knowledge of UiPath. Also, the components required to fulfil the business processes are generally available. However, elevating the process from a so-called attended process (= the user is required to monitor and interact with the robot) to an unattended, server-run process, which includes the required error handling, logging, secure login as well as exception management, is far beyond the scope of the business user.

Considering the additional disadvantages described initially, the value add of the solution diminishes.

After thorough analysis of the overall solution architecture, there are essentially three major business processes for which UiPath is needed:

• Digitization, splitting and classification of Documents

• Extraction of Data using out-of-the-box and retrainable AI Models as well as manual validation via Action Center to retrain models based on manual validation.

UiPath has a prebuilt package for those activities, which is labelled "Intelligent Document Understanding". It is a collection of activities in the UiPath editor which can be easily configured and which take over much of the overhead of the complex activities required to digitize, classify and extract data from documents as well as to manage the sophisticated AI models in the background.

All other business processes, such as logging into non-personal mailboxes, retrieving user-specific information, logging and notification, as well as the validation and submission of data to the ERP system, are not unique to the solution (multiple other systems or processes require the same services or functionalities) and can be implemented and managed significantly more efficiently in traditional programming languages.

Hence, the decision was to implement only the core intelligent document understanding processes in UiPath, design the individual processes as separate microservices and embed those document understanding processes into the existing serverless architecture by letting the robot interact with corporate APIs, which take over the overhead for logging, error handling, notification of users as well as the submission to the ERP systems. The following new, micro-service based business process applies:

• Access to the Non Personal Mailbox (NPM) is managed via the Microsoft Graph API.

• RPA Dispatcher Service: Triggered every 2 hours. The only purpose of the dispatcher is to access the Graph API mentioned above, retrieve all emails with attachments from the NPM, digitize and split the documents and store the artifacts in the Document Understanding Job Queue of UiPath, as well as submit the downloaded attachments to the corporate data lake on Azure Blob Storage for further processing.

• Submission API: Corporate API service which handles all interaction with the corporate data lake and data warehouse. The Submission API has endpoints for storing data in the Blob Storage. Storage and validation as well as error handling are centrally managed by the API.

• RPA Performer Service: Triggered whenever a new artifact hits the Document Understanding Queue. The performer fulfils the remaining tasks of the intelligent document understanding process: extraction of data and retraining of AI models. Since the performer is decoupled from the dispatcher as well as from the overall process, multiple performer tasks can be executed simultaneously.

• Notification API: If the extraction is not successful, the results are submitted to the notification service API. The notification API is an existing corporate service which sends email notifications to corporate users based on the request body and parameters. The emails are standardised and centrally managed by the Digital Office. This ensures that the email notifications are sent from a single mailbox and are designed according to corporate design guidelines. Also, error handling and monitoring are built into the service.

• If the Extraction was successful, the extraction results are submitted via the previously mentioned submission API. The API will validate and enrich the data with additional master and meta data from existing corporate data warehouse processes. This data is then centrally available and is automatically further processed for submission to the corresponding ERPs.

• The successful triggering of the submission API will automatically trigger additional processes:

• Archiving of the successfully extracted documents on the corporate data lake into the corporate digital archive based on standardised naming and storage convention for audit purposes.

• Notification of business user that the document has been successfully processed.

• Preparation of ERP specific data submission (e.g. via API or EDI formatter).

The actual high-level architecture is described below. The benefits of this approach are:

• Decomposition of the initial monolithic and hard-to-maintain architecture into individual, loosely coupled microservices interacting with each other via documented APIs.

• Re-usable components enabling use-case agnostic usage of services (e.g. the notification service is used by multiple applications for application-specific use cases, and data stored in the database is used for customer cross-referencing by the sales department).

• Overall solution is centrally managed in a single Git Repository and decomposed into the main components:

• 1. API Services

• 2a. RPA Performer

• 2b. RPA Dispatcher

• Clear separation of development, integration and production environment.

• Breakdown into microservices using Azure Function Apps offers out-of-the-box Application Insights and monitoring of application health. Debugging as well as enhancement of the system is simplified since services are separated and encapsulated.

• Secure and central management of environment variables, login information and passwords via secure Azure DevOps libraries.

• No vendor lock-in and simplified replacement of individual components (e.g. replacement of UiPath by another 3rd party solution is possible). The UiPath functionalities used are limited to the core activities of the Document Understanding process; all other components are based on existing corporate services.
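The points about separated environments and centrally managed secrets can be sketched as follows. This is not the configuration of the solution described here: the variable names `APP_ENV`, `SUBMISSION_API_URL` and `SUBMISSION_API_KEY` are invented for illustration, and the sketch assumes that the deployment pipeline exposes the centrally stored values to the service as environment variables.

```python
import os
from dataclasses import dataclass, field
from typing import Mapping

ALLOWED_ENVIRONMENTS = ("development", "integration", "production")
REQUIRED_SETTINGS = ("APP_ENV", "SUBMISSION_API_URL", "SUBMISSION_API_KEY")


@dataclass(frozen=True)
class ServiceConfig:
    environment: str
    submission_api_url: str
    # Excluded from repr so the key does not end up in logs by accident.
    submission_api_key: str = field(repr=False)


def load_config(env: Mapping[str, str] = os.environ) -> ServiceConfig:
    """Read all settings from the environment and fail fast if any is missing."""
    missing = [name for name in REQUIRED_SETTINGS if not env.get(name)]
    if missing:
        raise RuntimeError("Missing settings: " + ", ".join(missing))
    if env["APP_ENV"] not in ALLOWED_ENVIRONMENTS:
        raise ValueError(f"Unknown environment: {env['APP_ENV']}")
    return ServiceConfig(
        environment=env["APP_ENV"],
        submission_api_url=env["SUBMISSION_API_URL"],
        submission_api_key=env["SUBMISSION_API_KEY"],
    )
```

Because nothing is hard-coded, the same code can run unchanged in development, integration and production, and no credential ever needs to be stored inside a workflow.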

In addition, thanks to the shared responsibility and collaboration at eye level, business and IT teams both contributed their relevant expertise to the implementation. The business is spared the frustration of taking on mundane IT tasks, whereas IT can ensure the operations and health of all affected systems while keeping all corporate requirements on IT and application security under its control.
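The decoupling of dispatcher and performer described earlier can be pictured with a small in-memory sketch. It is a simplification under stated assumptions: a Python `deque` stands in for the UiPath queue, and the `extract`, `submit` and `notify` callables stand in for the extraction models, the submission API and the notification API. None of the names below are actual UiPath or corporate API calls.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, Dict, Optional


@dataclass
class Artifact:
    document_id: str
    text: str


def dispatch(attachments: Dict[str, str], queue: Deque[Artifact]) -> int:
    """Dispatcher: only puts one artifact per attachment on the queue."""
    for document_id, text in attachments.items():
        queue.append(Artifact(document_id, text))
    return len(attachments)


def perform(queue: Deque[Artifact],
            extract: Callable[[str], Optional[dict]],
            submit: Callable[[dict], None],
            notify: Callable[[dict], None]) -> None:
    """Performer: drains the queue and routes every result to exactly one service."""
    while queue:
        artifact = queue.popleft()
        fields = extract(artifact.text)
        if fields is None:
            # Failed extraction goes to the notification service.
            notify({"document_id": artifact.document_id,
                    "status": "extraction_failed"})
        else:
            # Successful extraction goes to the submission service.
            submit({"document_id": artifact.document_id, "fields": fields})
```

Since the performer only depends on the queue and on three callables, each of them can be replaced or tested in isolation, which is the practical meaning of loosely coupled services.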

Outlook:

With the implementation of the micro-service based approach for Intelligent Document Understanding using UiPath and Azure serverless Functions, the benefits of low code platforms and of a serverless Function App architecture are successfully combined. Every component of the process can be individually maintained and enhanced as well as reused for other corporate processes. Due to its design, the process is very robust and easy to monitor and maintain. There is a strong collaboration between business and IT as well as a clear segregation of duties: business users are responsible for the maintenance of the model, the taxonomy and the training of the robot, whereas the IT team ensures the operations and overall performance of the solution stack. The whole process is integrated into a single GitHub repository and managed via Azure DevOps CI/CD pipelines. The continuous integration and deployment of new features or bug fixes is hence automated and standardised. Because only the Document Understanding activities of UiPath are used, the solution is flexible enough to integrate other providers and thus prevent vendor lock-in. As a result, we are currently evaluating the usage of Large Language Models like GPT to take over the tasks of UiPath, in order to improve extraction results and performance but also to decrease UiPath licensing costs.
